As of version 1.6.3, the Db2 for IBM i extension can integrate with specific AI extensions:

Continue

✅ Multiple AI Providers available, including Watsonx!

GitHub Copilot

✅ Requires GitHub Copilot licence

The Db2 for i SQL code assistant provides integrations with AI code assistants such as GitHub Copilot Chat and Continue. These integrations let you ask questions about your Db2 for IBM i database and get help writing SQL queries, all directly from your VS Code editor.

Common use cases of the SQL code assistant include:

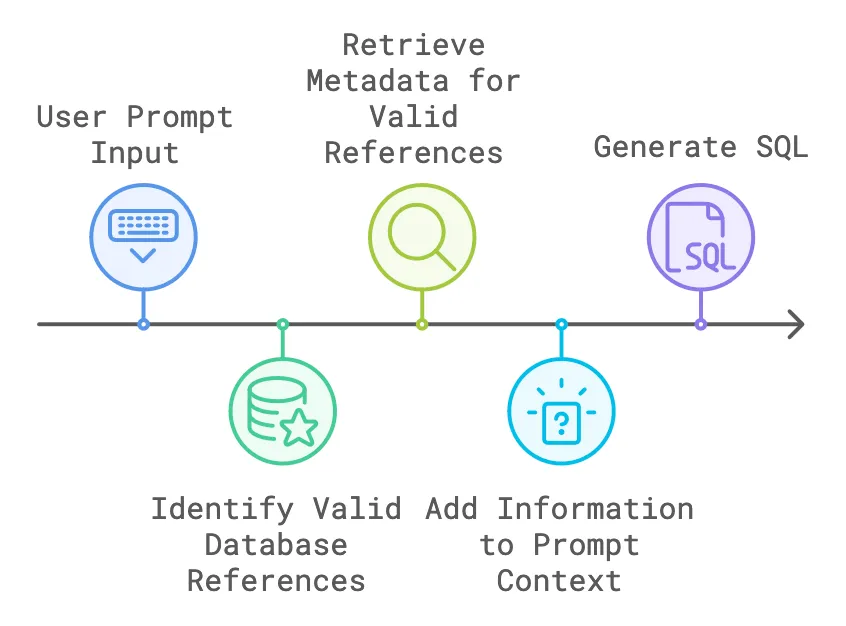

The @db2i GitHub Copilot chat participant and the @Db2i Continue context provider work in the same way: both extract relevant database information from the user prompt. Here is a breakdown of the algorithm:

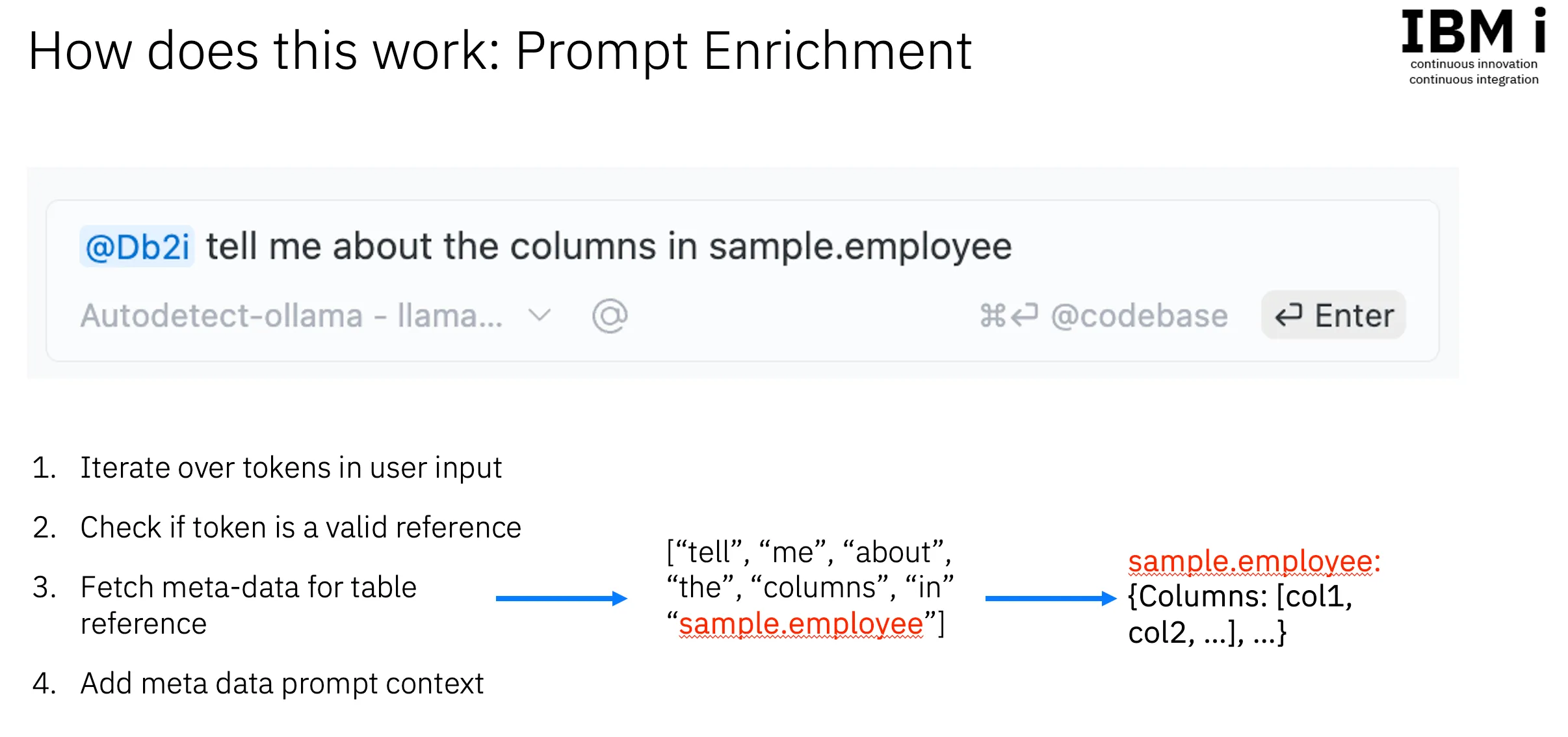

Given a prompt like:

"tell me about the columns in sample.employee"

- sample.employee is recognized as a valid table reference.
- If you use a qualified reference (e.g. sample.employee), then the agent knows to look in the sample schema/library. If you use an unqualified reference (e.g. employee), then the agent will look in the library list of the active SQL job to resolve the object.
- The lookup goes through the SQL Job Manager in the Db2 for i VS Code extension, which queries relevant database information based on the user’s active connection.

Let’s break down the process of how the @db2i chat participant and the @Db2i context provider work:

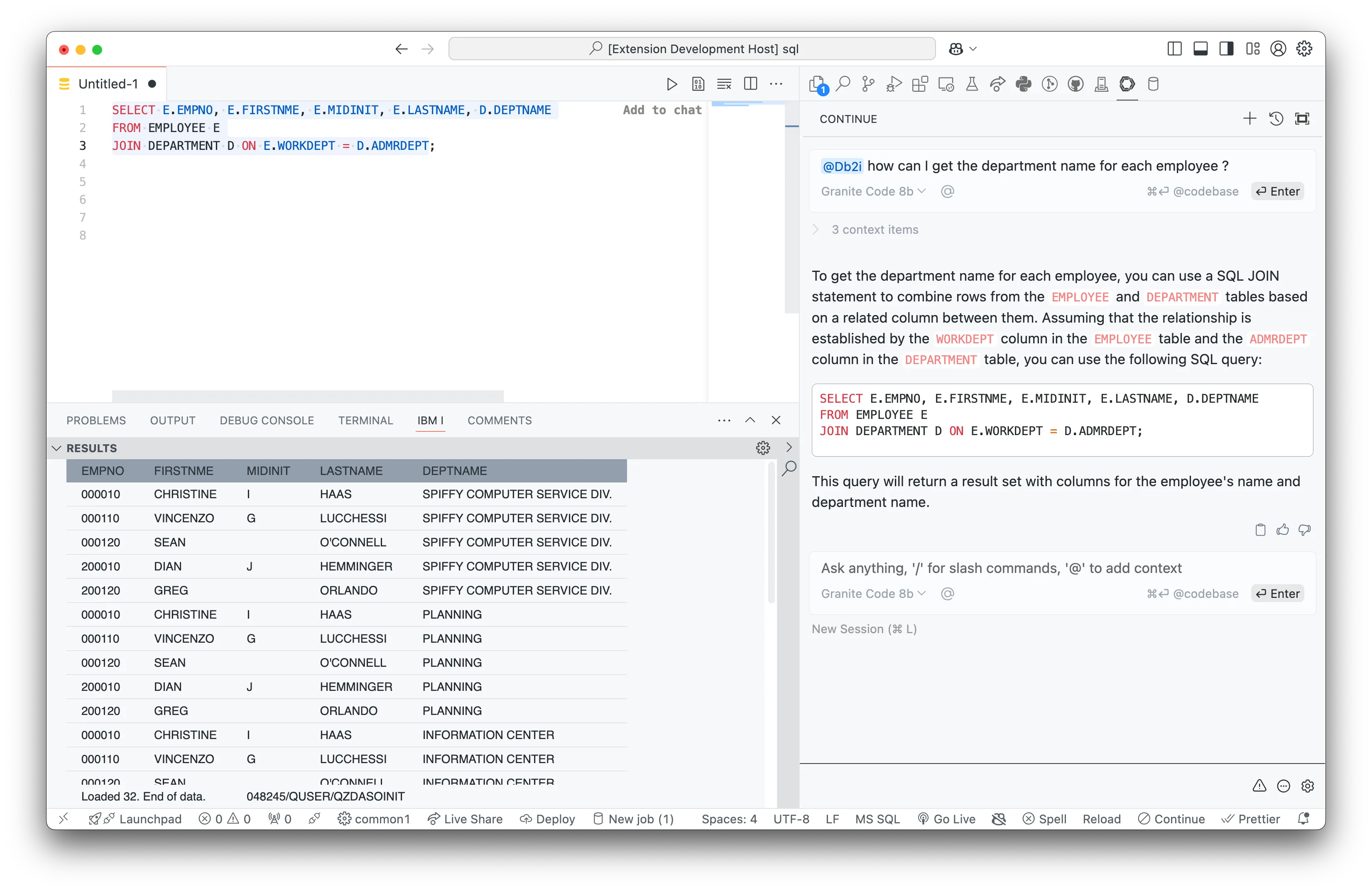
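The qualified versus unqualified resolution rule described above can be sketched as follows. This is a minimal illustration, not the extension's actual implementation: `parseRef`, `resolveRef`, `findTableRefs`, and the in-memory `demoCatalog` are all hypothetical names, and the existence check stands in for whatever lookup the SQL Job Manager performs against the active connection.

```typescript
// Hypothetical types and helpers for illustration only.
interface TableRef {
  schema?: string; // set only for qualified references such as sample.employee
  name: string;
}

interface ResolvedRef {
  schema: string;
  name: string;
}

// Parse a token like "sample.employee" or "employee"; names are upper-cased.
function parseRef(token: string): TableRef | undefined {
  const match = /^([A-Za-z_#@$][\w#@$]*)(?:\.([A-Za-z_#@$][\w#@$]*))?$/.exec(token);
  if (!match) return undefined;
  const first = match[1];
  const second = match[2];
  if (first === undefined) return undefined;
  return second !== undefined
    ? { schema: first.toUpperCase(), name: second.toUpperCase() }
    : { name: first.toUpperCase() };
}

// Qualified names use their own schema; unqualified names walk the library list in order.
function resolveRef(
  ref: TableRef,
  libraryList: string[],
  exists: (schema: string, name: string) => boolean
): ResolvedRef | undefined {
  const candidates = ref.schema !== undefined ? [ref.schema] : libraryList;
  for (const schema of candidates) {
    if (exists(schema, ref.name)) return { schema, name: ref.name };
  }
  return undefined;
}

// Scan a prompt and keep only the tokens that resolve to an existing table.
function findTableRefs(
  prompt: string,
  libraryList: string[],
  exists: (schema: string, name: string) => boolean
): ResolvedRef[] {
  const resolved: ResolvedRef[] = [];
  for (const raw of prompt.split(/\s+/)) {
    const token = raw.replace(/[.,;:?!]+$/, ""); // drop trailing punctuation
    const ref = parseRef(token);
    if (!ref) continue;
    const hit = resolveRef(ref, libraryList, exists);
    if (hit) resolved.push(hit);
  }
  return resolved;
}

// Stand-in for a real catalog lookup (assumption: two tables in SAMPLE).
const demoCatalog = new Set(["SAMPLE.EMPLOYEE", "SAMPLE.DEPARTMENT"]);
const demoExists = (schema: string, name: string): boolean =>
  demoCatalog.has(`${schema}.${name}`);

const demoRefs = findTableRefs(
  "tell me about the columns in sample.employee",
  ["QGPL", "SAMPLE"],
  demoExists
);
console.log(demoRefs);
```

In this sketch an unqualified `employee` resolves only if one of the libraries in the list contains it, which mirrors the limitation that an unset or incorrect schema prevents table references from being resolved.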

@Db2i Context Provider

Consider the following prompt, asked with the @Db2i context provider:

"How can I get the department name for each employee?"

Given the context of the employee and department tables, the model generates an accurate SQL JOIN statement.

By integrating database context directly into our prompts, we make SQL generation not only faster but more accurate and insightful.
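To make the idea of integrating database context into a prompt concrete, here is a minimal sketch of how table metadata might be rendered as text and placed ahead of the user's question. The `TableInfo` shape, the `describeTable` and `buildPrompt` helpers, and the sample column definitions are assumptions for illustration; the extension's real context format may differ.

```typescript
// Hypothetical shapes for illustration; not the extension's actual types.
interface ColumnInfo {
  name: string;
  type: string;
}

interface TableInfo {
  schema: string;
  name: string;
  columns: ColumnInfo[];
}

// Render one table as a compact text block the model can read.
function describeTable(table: TableInfo): string {
  const cols = table.columns.map((c) => `  ${c.name} ${c.type}`).join("\n");
  return `Table ${table.schema}.${table.name}:\n${cols}`;
}

// Place the table descriptions ahead of the user's question.
function buildPrompt(question: string, tables: TableInfo[]): string {
  const context = tables.map(describeTable).join("\n\n");
  return `Database context:\n${context}\n\nQuestion: ${question}`;
}

// Illustrative metadata only; the column lists are abbreviated assumptions.
const sampleTables: TableInfo[] = [
  {
    schema: "SAMPLE",
    name: "EMPLOYEE",
    columns: [
      { name: "EMPNO", type: "CHAR(6)" },
      { name: "WORKDEPT", type: "CHAR(3)" },
    ],
  },
  {
    schema: "SAMPLE",
    name: "DEPARTMENT",
    columns: [
      { name: "DEPTNO", type: "CHAR(3)" },
      { name: "DEPTNAME", type: "VARCHAR(36)" },
    ],
  },
];

const samplePrompt = buildPrompt(
  "How can I get the department name for each employee?",
  sampleTables
);
console.log(samplePrompt);
```

With both tables' columns in view, a model has what it needs to infer that a join between the employee and department tables answers the question.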

If you do not want to share your data with any AI services, then do not invoke the functionality through VS Code. For example, we only fetch metadata when the user explicitly requests it through the chat windows. We do not fetch any metadata without the user explicitly using the chat windows in either Copilot Chat or Continue. Simply don’t install the extensions, or don’t use the @db2i context.

The metadata that is fetched comes from QSYS2.SYSCOLUMNS2, QSYS2.SYSKEYCST and results from QSYS2.GENERATE_SQL.

The @db2i chat participant and the @Db2i context provider are designed to provide accurate and insightful SQL generation based on the user’s database context. However, there are some limitations to keep in mind:

- The @db2i chat participant and the @Db2i context provider rely on the active SQL Job’s schema to resolve table references. If the schema is not set or is incorrect, the model may not be able to resolve table references accurately.
- The @db2i chat participant and the @Db2i context provider are optimized for generating simple SQL queries. For more complex queries, the model may not be able to provide accurate results.
- The @db2i chat participant and the @Db2i context provider do not store or transmit any user data. All database metadata is fetched from the SQL Job Manager based on the user’s active connection.
- The @db2i chat participant and the @Db2i context provider are continuously being improved to provide more accurate and insightful SQL generation. If you encounter any issues or have feedback, please let us know!