GitHub Copilot

✅ Requires GitHub Copilot licence

Dette indhold er ikke tilgængeligt i dit sprog endnu.

New! The Db2 for IBM i extension, as of 1.6.3, has the ability to integrate with specific AI extensions:

GitHub Copilot

✅ Requires GitHub Copilot licence

Continue

✅ Multiple AI Providers available, including Watsonx!

The Db2 for i SQL code assistant provides intregrations with AI code assistants such as GitHub Copilot Chat and Continue. These integrations allow you to ask questions about your Db2 for IBM i database, and get help with writing SQL queries. The best part is that you can do this directly from your VS Code editor.

Common Use cases of the SQL code assistant include:

You can use GitHub Copilot, with the @db2i chat participant, to ask questions either about Db2 for IBM i or about specific tables in the current schema of the selected job. You can ask things like:

@db2i What columns make up an employee?@db2i Can you get the department name for each employee?@db2i Write a select statement to count how many employees are in each departmentInstall GitHub Copilot from the VS Code Marketplace.

Install the Db2 for IBM i extension from the VS Code Marketplace.

Connect to your IBM i system using the Code for IBM i extension.

Once connected, the @db2i chat participant is available in the chat window. You can ask questions about your database, and get help with writing SQL queries.

Once GitHub Copilot is installed, you can ask questions about your database using the @db2i chat participant. Here are some examples:

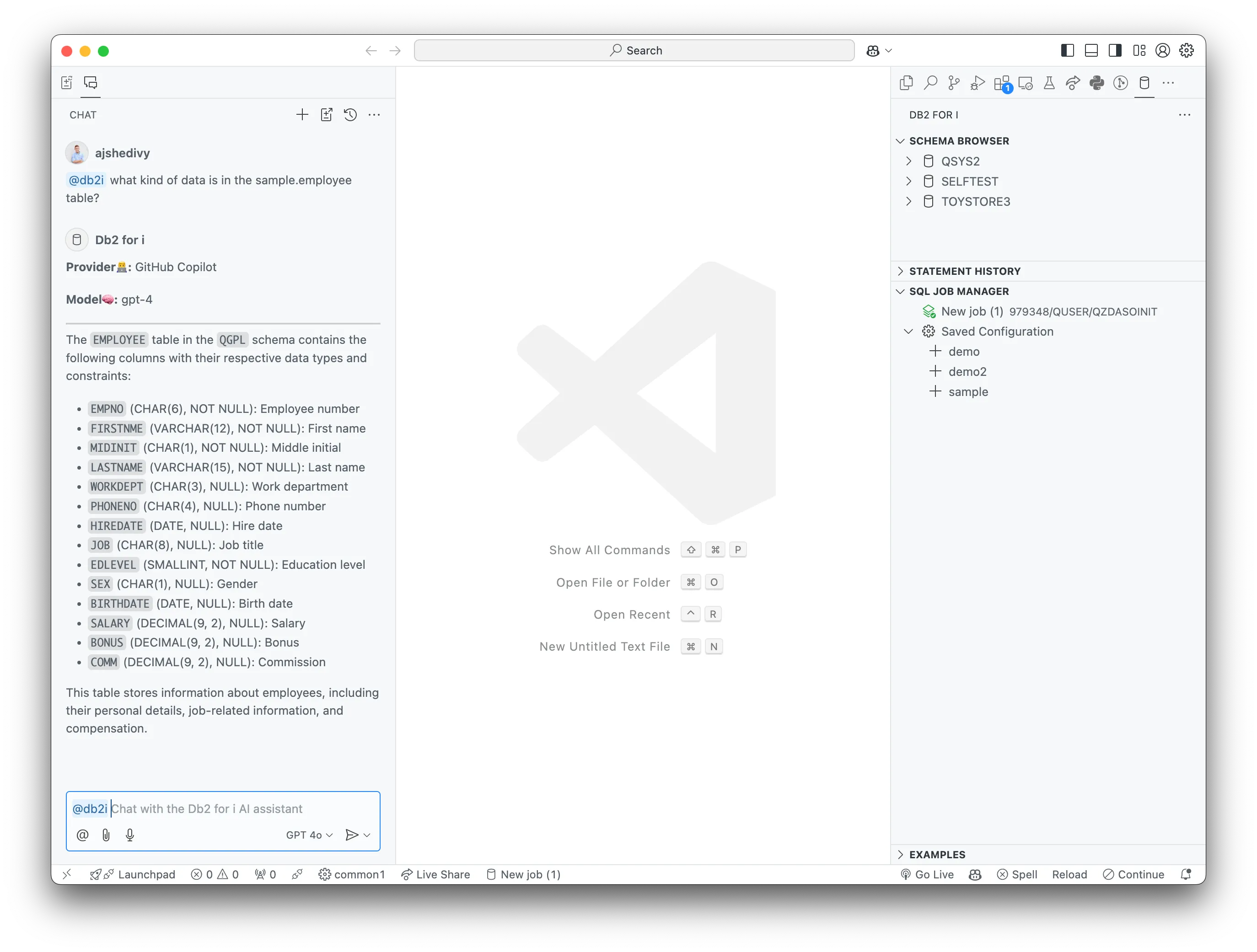

Example 1 Summarize the columns in the EMPLOYEE table

This is a simple example to show how you can ask questions about the structure of your database. You can ask the chat participant to summarize the columns in a table.

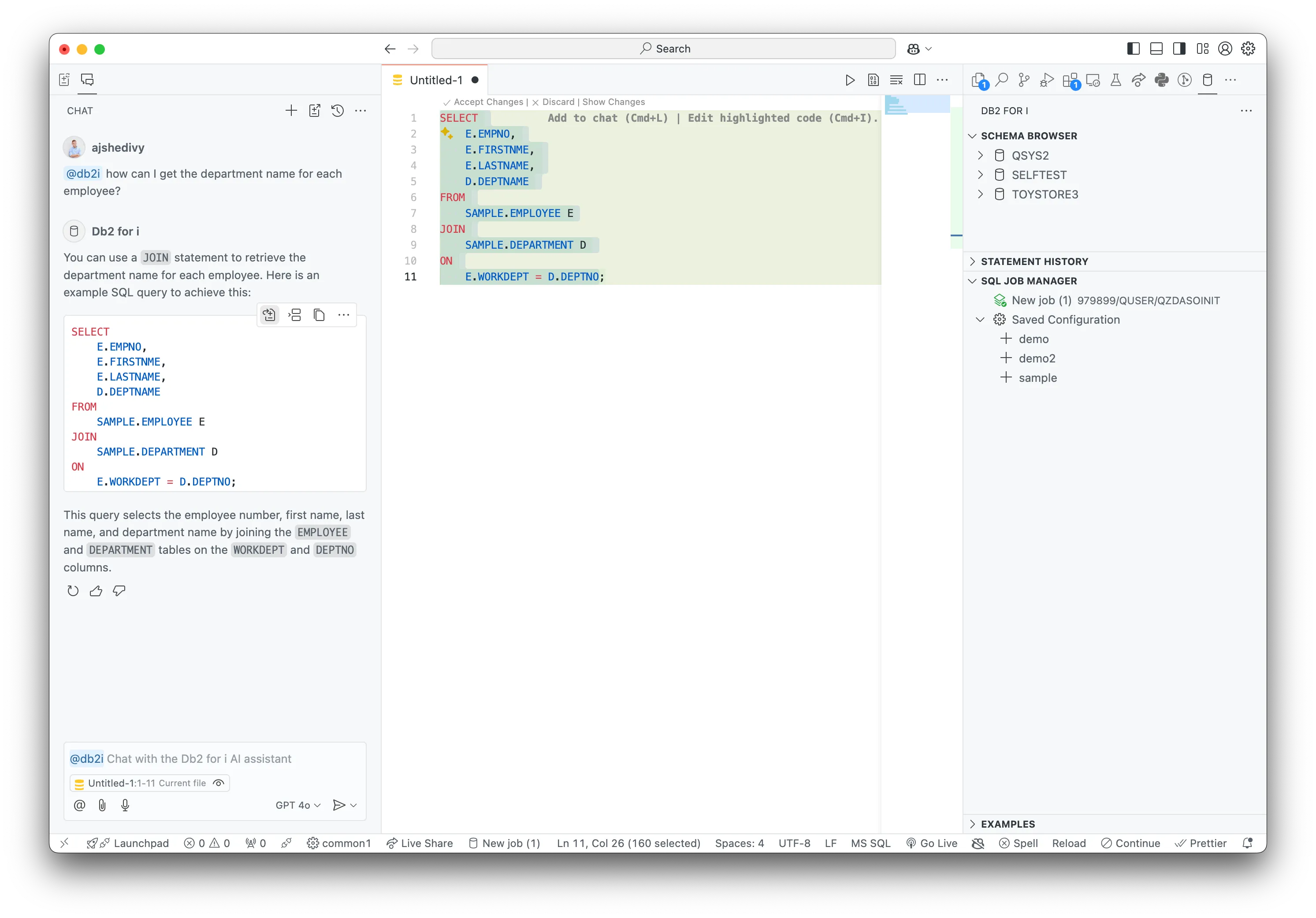

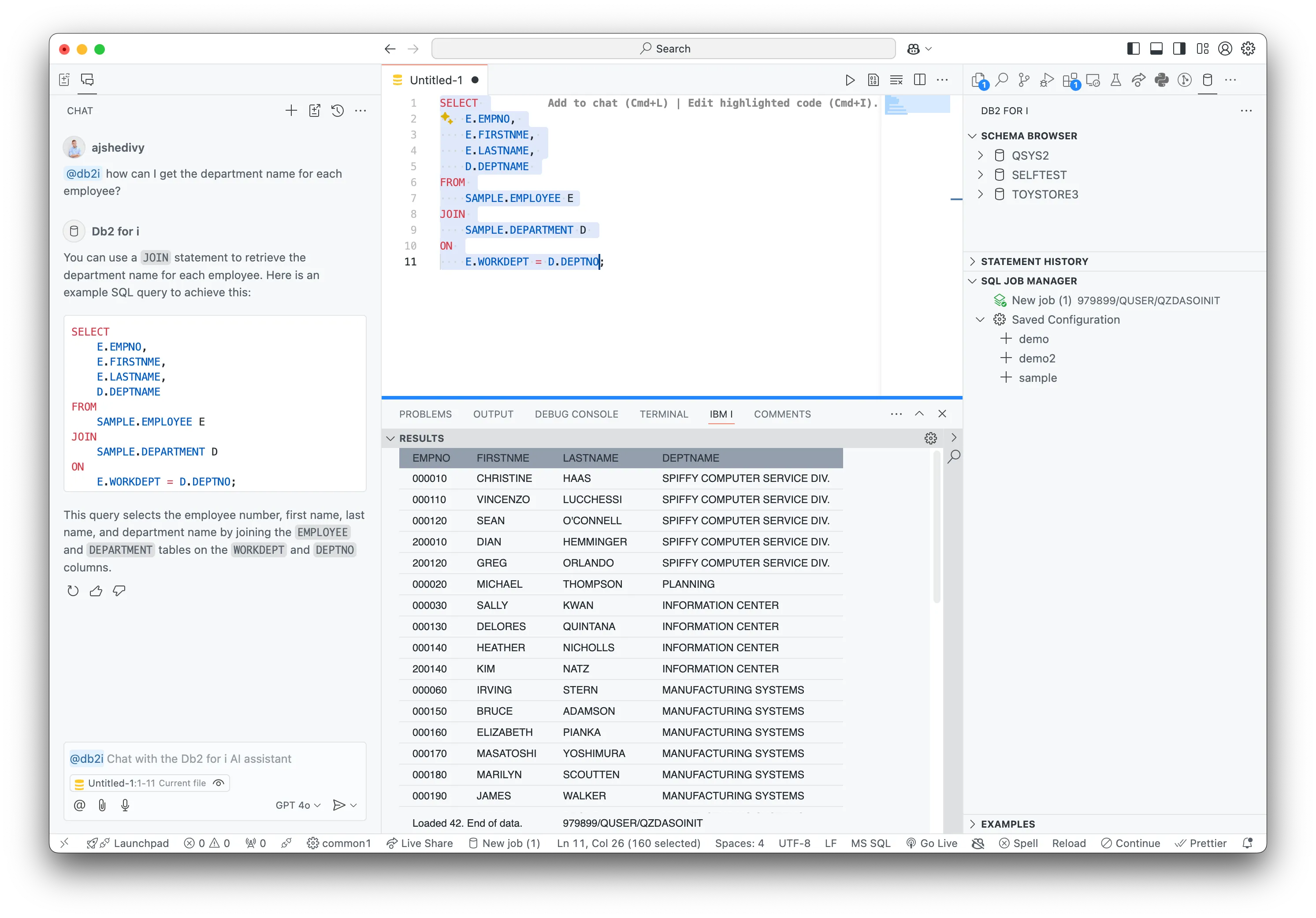

Example 2: Get the department name for each employee

This example shows how you can ask the chat participant to write a query that joins two tables to get the department name for each employee. The chat participant will generate the SQL query for you that you can run directly in your SQL editor in VS Code.

Run the Generated SQL to get the result:

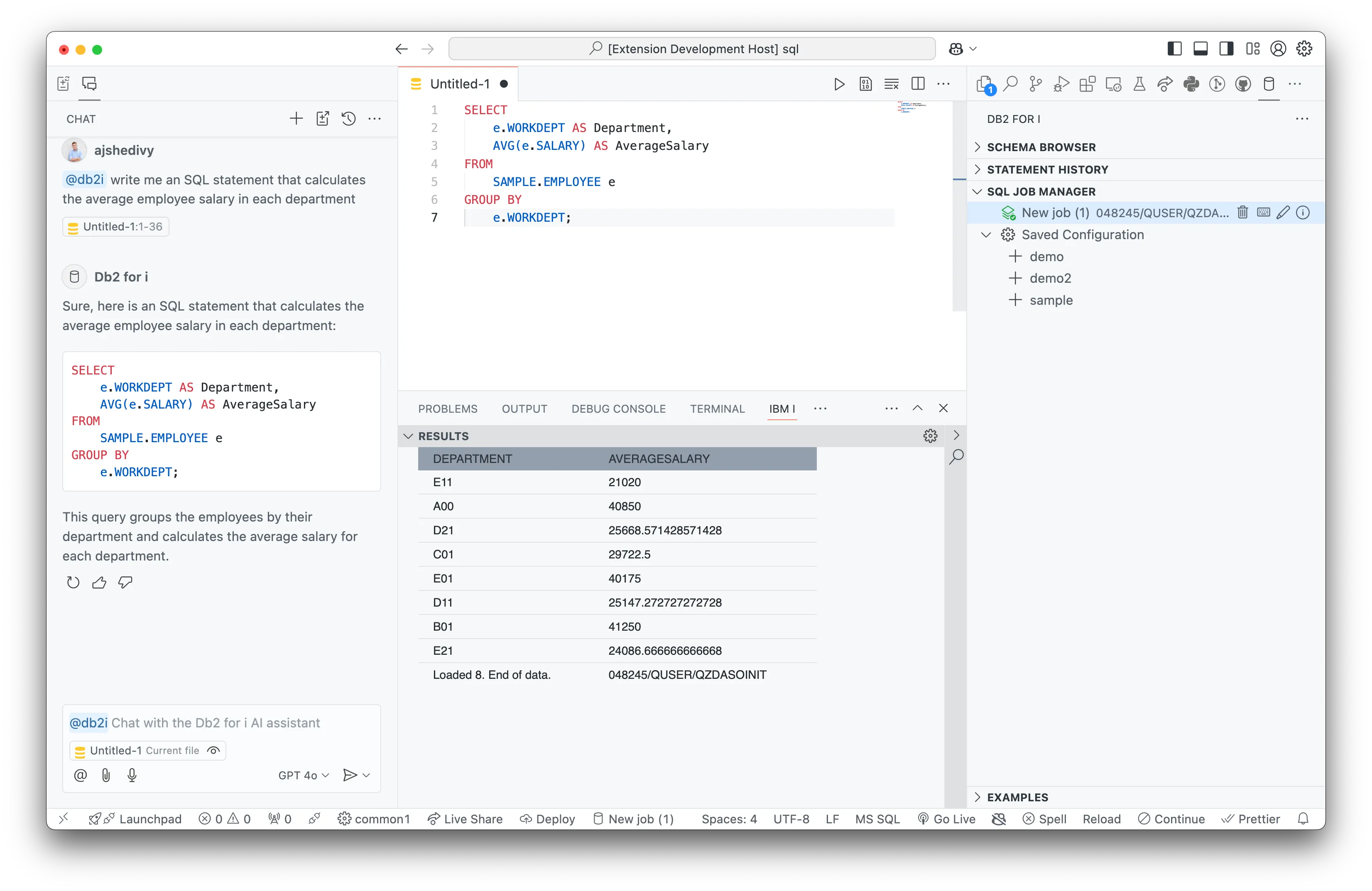

Example 3: Calculate the Average salary for each department

A slightly more complex example that shows how you can ask the chat participant to write a query that calculates the average salary for each department.

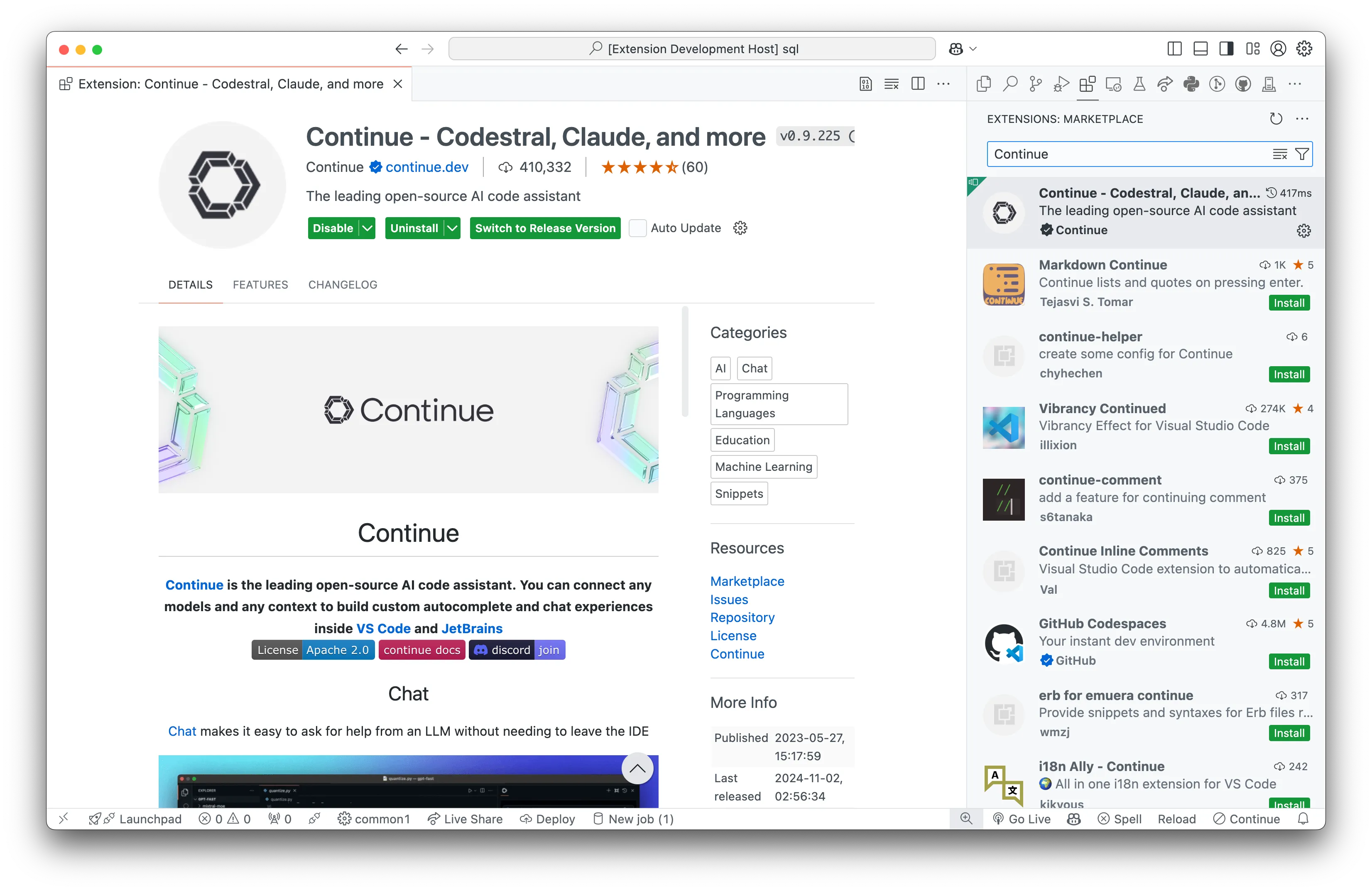

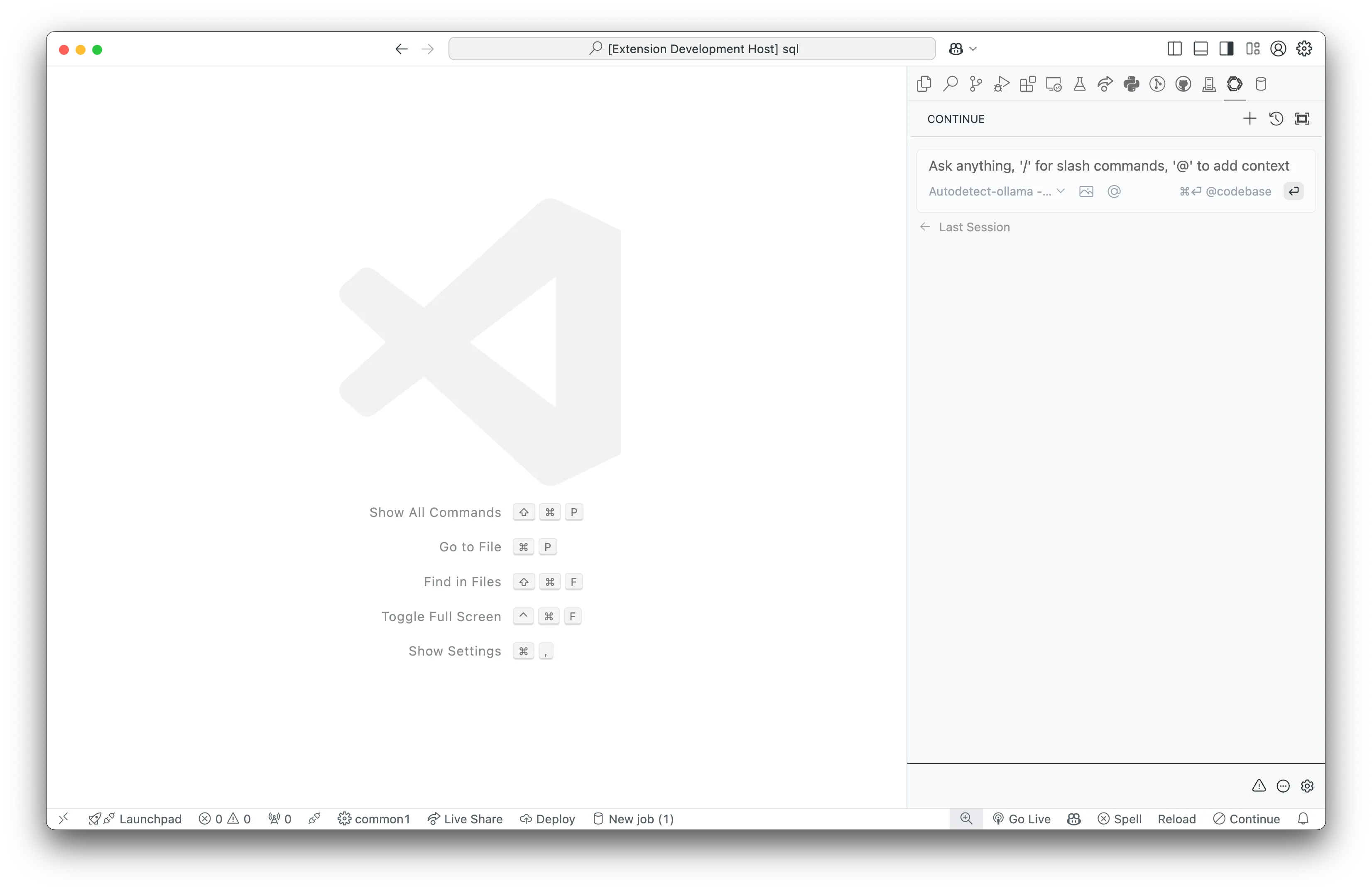

Continue is the leading open source AI code assistant for VS Code. It provides a wide range of AI features:

Once you have the extension installed, you can configure the AI provider you want to use. Continue supports multiple AI providers (including Watsonx!). You can choose the provider you want to use by clicking on the settings icon in the chat window.

For demonstration purposes, we will use the Ollama Provider for hosting LLMs locally on your machine.

Here is a step-by-step guide to setting up the Ollama provider with the IBM Granite models in Continue:

Install Ollama on your machine by following the link below:

The IBM Granite 3.0 models are available in the Ollama model registry. More information about the IBM Granite models can be found here.

Using the Ollama CLI, fetch the IBM Granite 3.0 8b model by running the following command:

ollama pull granite3-dense:8bOpen the VSCode Command Palette (Press ctrl+shift+p) and search for Continue: open config.json. This will open the Continue central config file $HOME/.continue/config.json in your editor. To enable the Granite models in Ollama, add the following configuration to the models section:

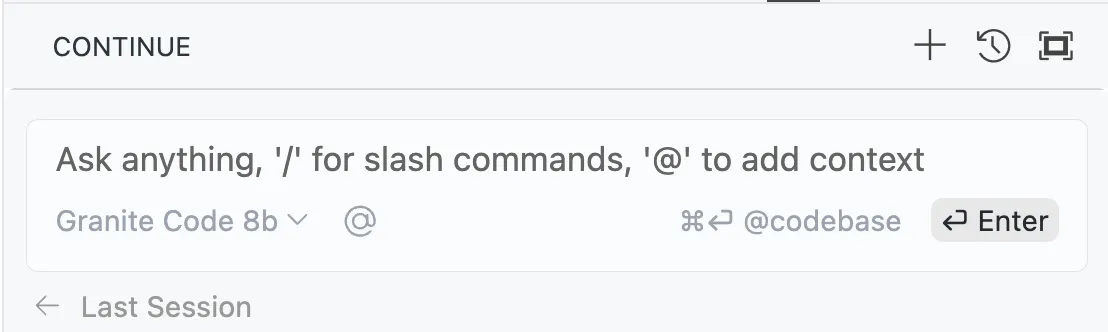

"models": [ { "title": "Granite Code 8b", "provider": "ollama", "model": "granite3-dense:8b" } ],save this file and select the Granite model in the chat window.

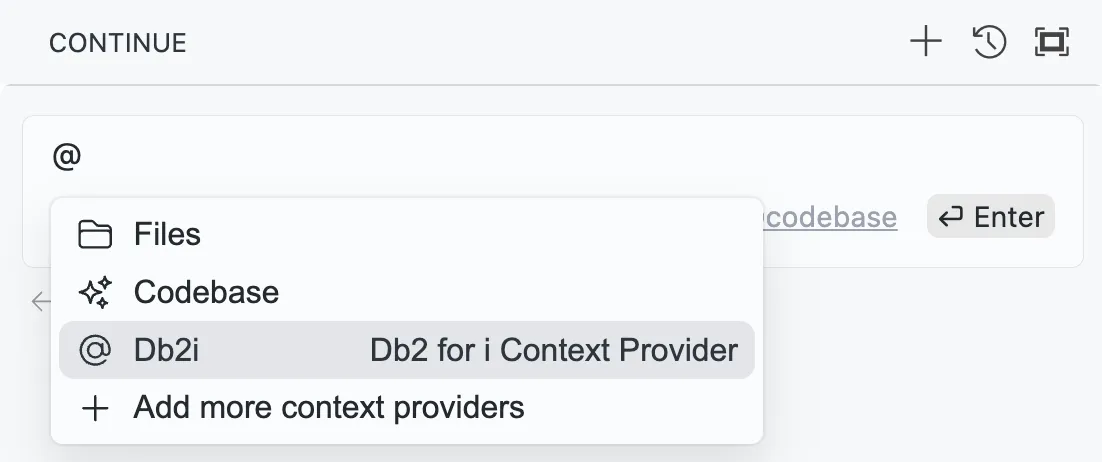

Once you have the extension installed and the AI provider configured, you can ask questions about your database using the chat window using the @db2i context provider. In Continue, a context provider is very similar to a chat participant in GitHub Copilot. It provides additional context to the AI model to help it generate more accurate SQL queries.

More on context providers can be found here.

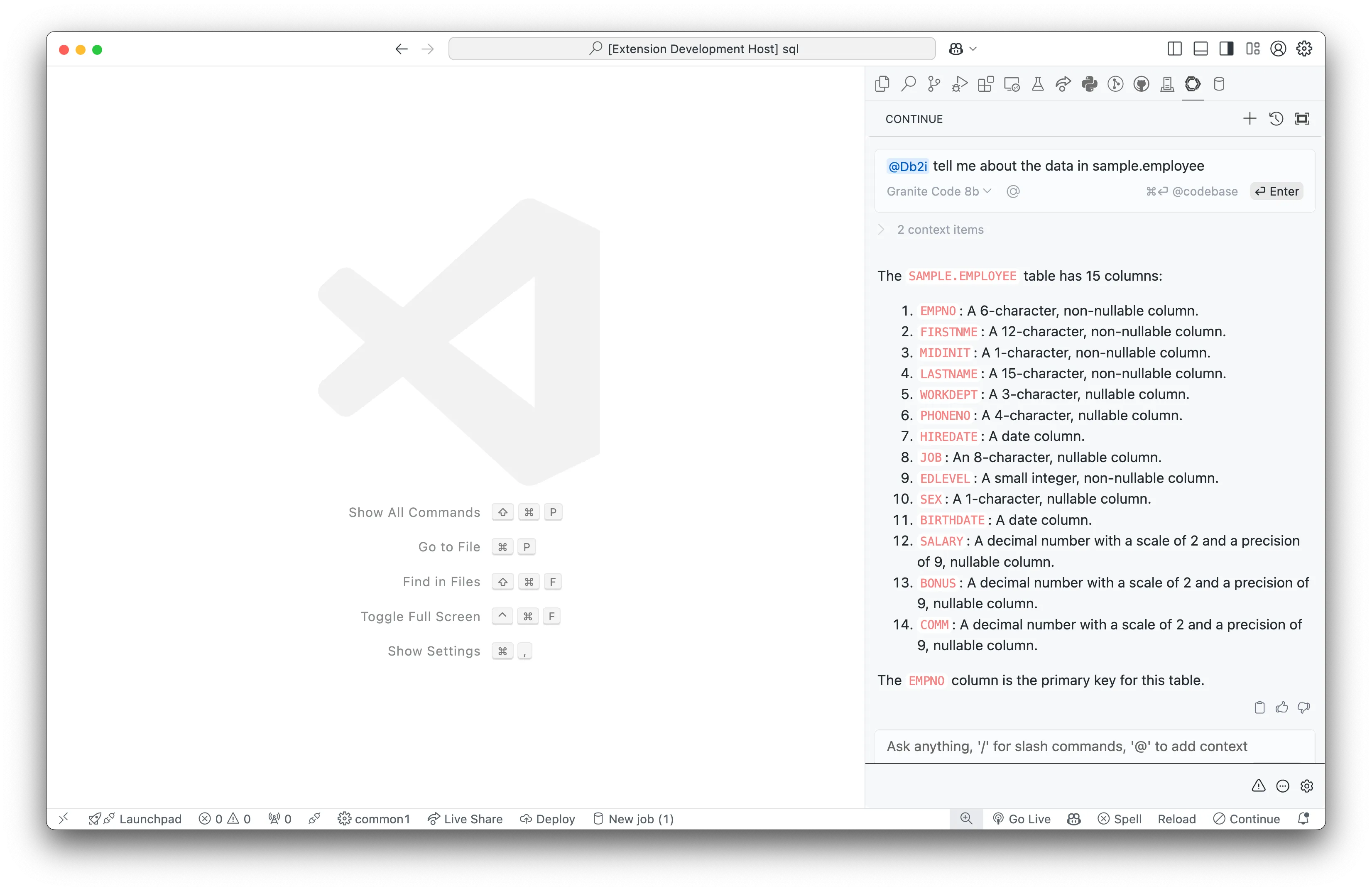

Example 1: Summarize the columns in the EMPLOYEE table

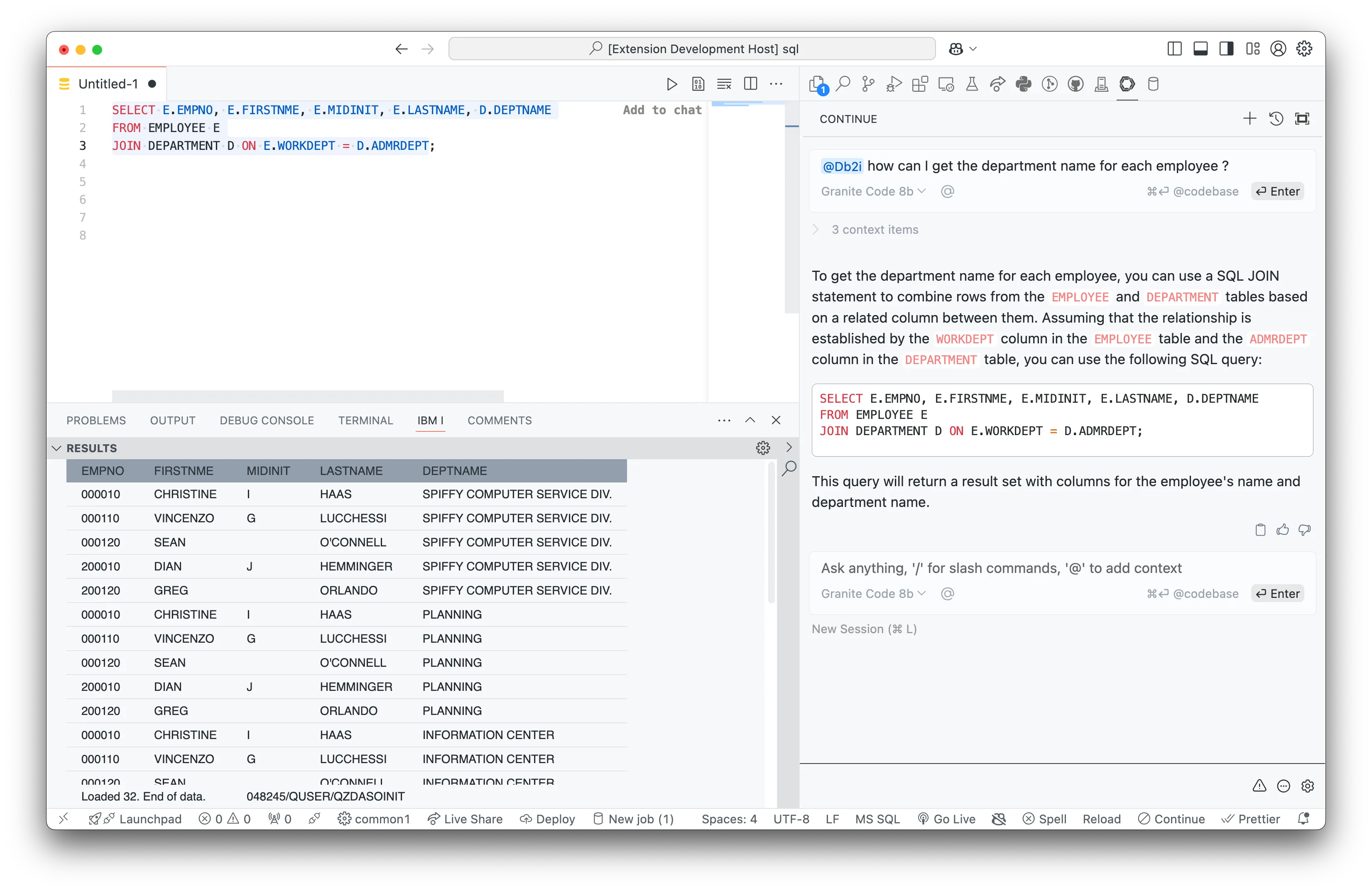

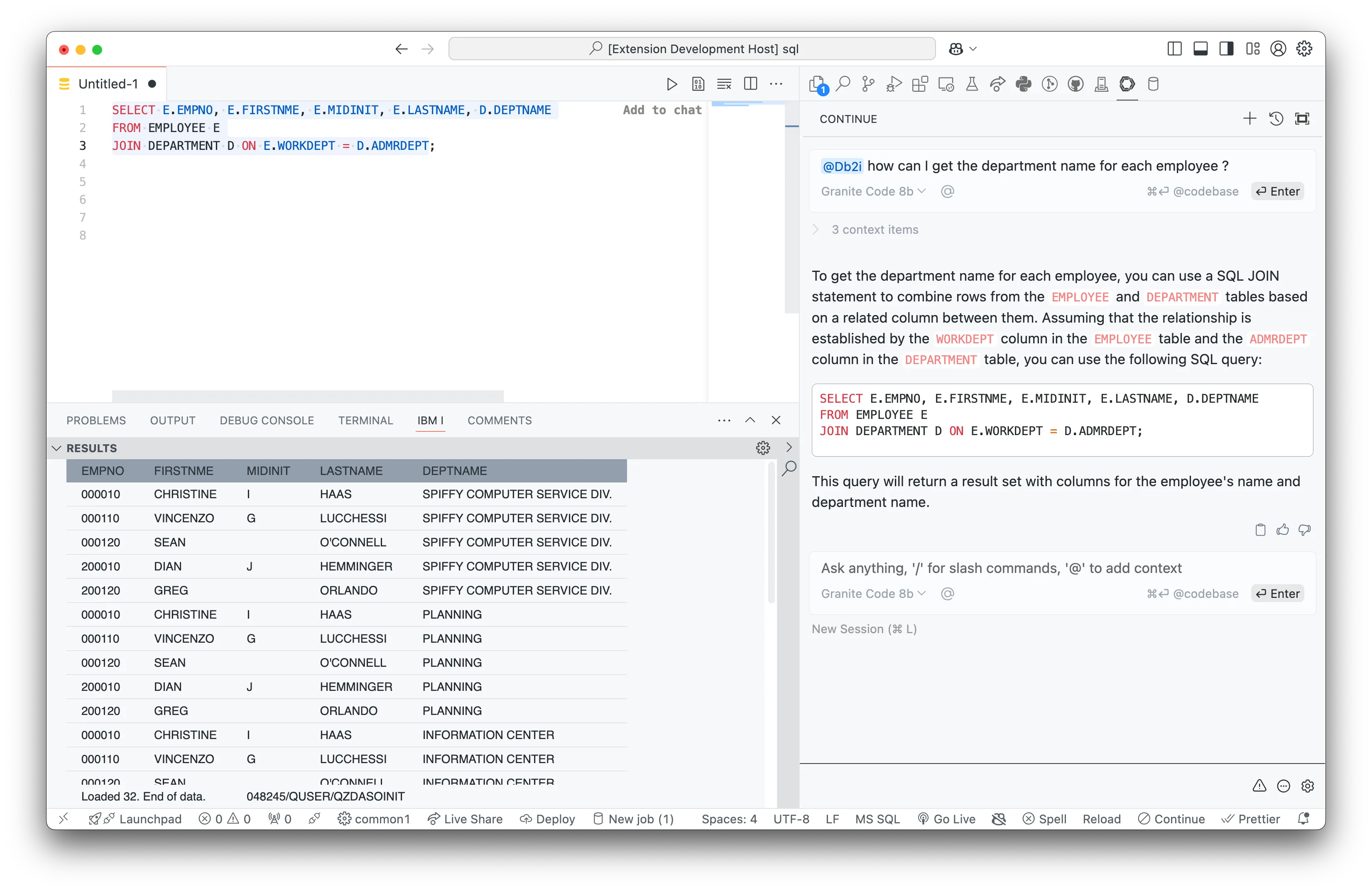

Example 2: Get the department name for each employee

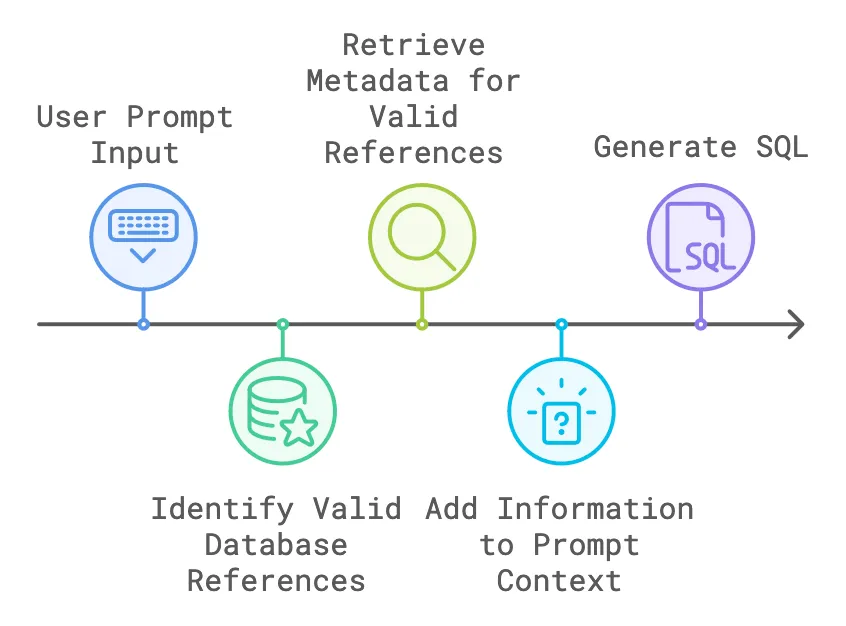

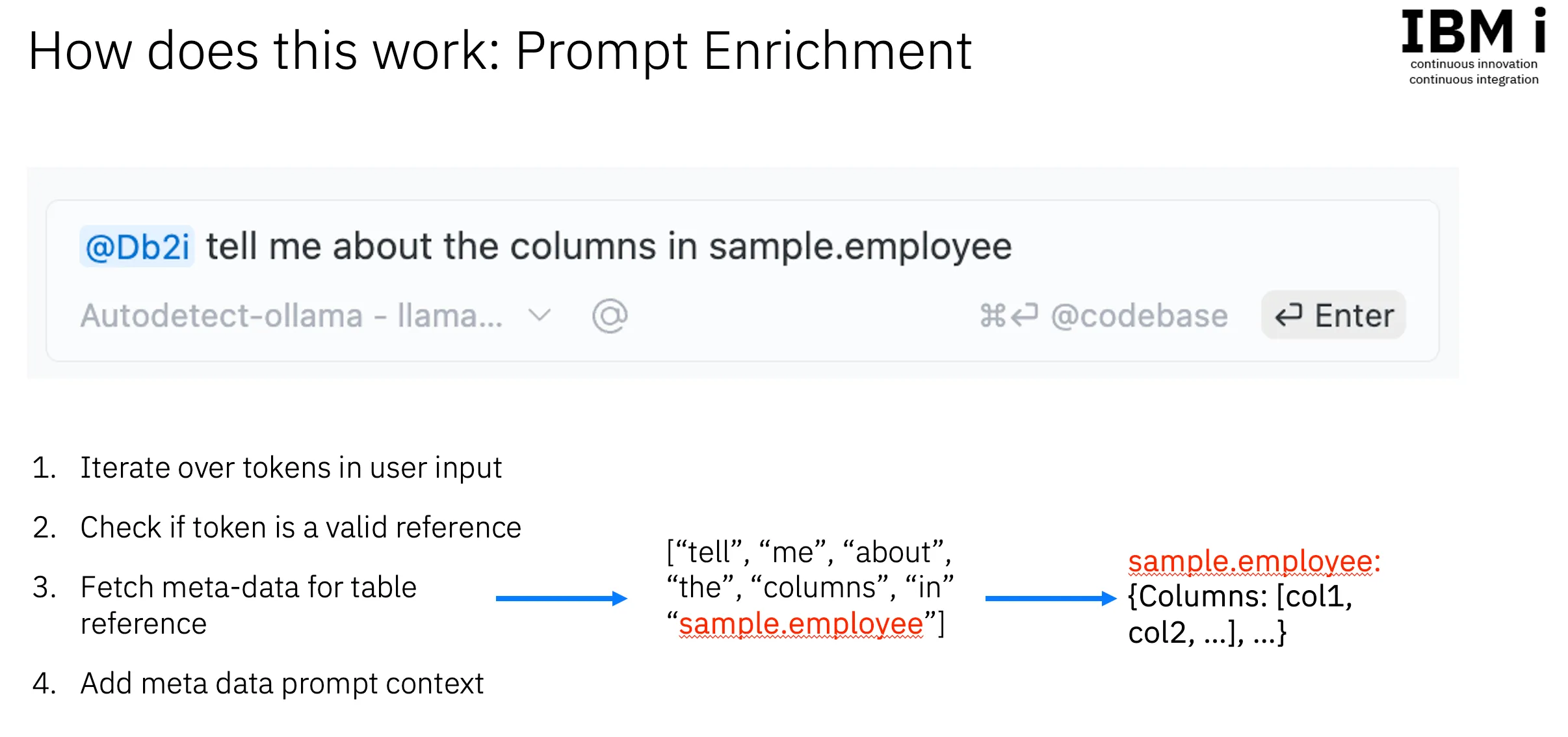

The @db2i GitHub Copilot chat participant and the @Db2i Continue context provider work in the same way by extracting relevant database information from the user prompt. Here is breakdown of the algorithm:

Given a prompt like:

tell me about the columns in sample.employeesample.employee is recognized as a valid table reference.SQL Job Manager in the Db2 for i VSCode extension, which queries relevant database information based on the user’s active connection.let’s break down the process of how the @db2i chat participant and the @Db2i context provider work:

@Db2i Context Provider

@Db2i context provider.How can I get the department name for each employee ?employee and department tables, the model generates an accurate SQL JOIN statement.By integrating database context directly into our prompts, we make SQL generation not only faster but more accurate and insightful.

If you do not want to share your data with any AI services, then do not invoke the functionality through VS Code. For example, we only fetch metadata when the user explicitly requests it through the chat windows. We do not fetch any metadata without the user explicitly using the chat windows in either Copilot Chat or Continue. Simply don’t install the extensions, or don’t use the @db2i context.

QSYS2.SYSCOLUMNS2 and QSYS2.SYSKEYCST in the context based on user input, including table names, column names, types and commentsTABLE(QSYS2.SYSTEM_STATUS(RESET_STATISTICS=>'YES',DETAILED_INFO=>'ALL')) when requested by the user.The @db2i chat participant and the @Db2i context provider are designed to provide accurate and insightful SQL generation based on the user’s database context. However, there are some limitations to keep in mind:

@db2i chat participant and the @Db2i context provider rely on the active SQL Job’s schema to resolve table references. If the schema is not set or is incorrect, the model may not be able to resolve table references accurately.SAMPLE.EMPLOYEE@db2i chat participant and the @Db2i context provider are optimized for generating simple SQL queries. For more complex queries, the model may not be able to provide accurate results.@db2i chat participant and the @Db2i context provider do not store or transmit any user data. All database metadata is fetched from the SQL Job Manager based on the user’s active connection.@db2i chat participant and the @Db2i context provider are continuously being improved to provide more accurate and insightful SQL generation. If you encounter any issues or have feedback, please let us know!